Python for Data Engineering: Building Scalable Data Pipelines

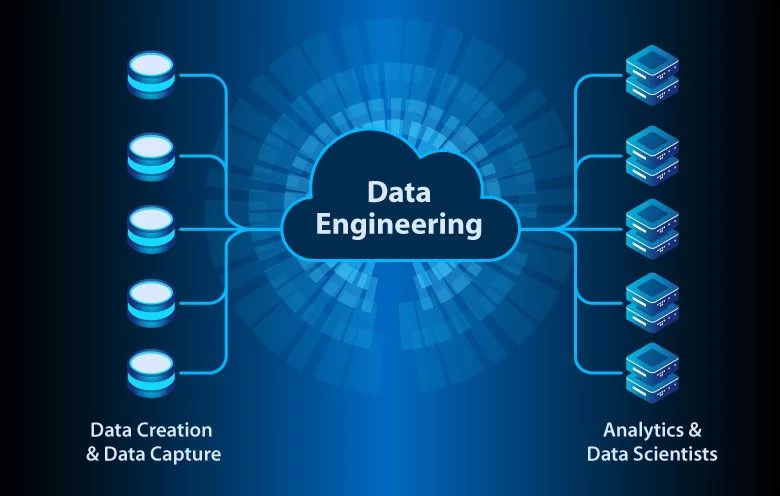

In the realm of big data, where data volumes are constantly growing, businesses rely on efficient data pipelines to manage, process, and transform their data for analysis. Here's where Python shines! Python's versatility and rich ecosystem of data engineering tools make it a powerful choice for building robust and scalable data pipelines.

"Python's readability and vast libraries make it an ideal language for developing data pipelines that can handle massive datasets efficiently."

Aniket Kumar, Data Engineer

Building Scalable Pipelines with Python

Python offers a comprehensive set of libraries and frameworks that streamline the data engineering workflow. Here are some key players:

- Pandas: For data manipulation and analysis, pandas provides the DataFrame, a high-performance, easy-to-use tabular data structure for filtering, joining, and aggregating data.

- NumPy: The foundation for numerical computing in Python, NumPy offers fast n-dimensional arrays and vectorized mathematical operations for data processing; pandas itself is built on top of it.

- Apache Spark: For large-scale data processing, Spark's Python API, PySpark, lets you write Python code that runs distributed across a cluster of machines.

- Airflow: A popular workflow management platform written in Python, Apache Airflow lets you define pipelines as DAGs (directed acyclic graphs) of tasks in Python code, then schedules and monitors them.
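To show how pandas and NumPy fit together in practice, here is a minimal extract-transform-load sketch. It assumes pandas and NumPy are installed; the inline CSV data, the column names, and the `extract`/`transform`/`load` function names are hypothetical, not taken from any specific pipeline. pandas handles parsing, cleaning, and grouping, while NumPy handles the element-wise numeric conversion.

```python
import io

import numpy as np
import pandas as pd

# Hypothetical sample input; a real pipeline would read from a file,
# database, or API instead of an inline string.
RAW_CSV = """order_id,region,amount
1,north,120.50
2,south,80.00
3,north,
4,east,45.25
5,south,60.00
"""


def extract(source: str) -> pd.DataFrame:
    """Extract: parse CSV text into a DataFrame (blank cells become NaN)."""
    return pd.read_csv(io.StringIO(source))


def transform(df: pd.DataFrame) -> pd.DataFrame:
    """Transform: drop rows with no amount, then total the amounts per region."""
    cleaned = df.dropna(subset=["amount"]).copy()
    # NumPy ufuncs work element-wise on pandas columns; storing integer cents
    # keeps the sums exact instead of accumulating floating-point error.
    cleaned["amount_cents"] = np.round(cleaned["amount"] * 100).astype(np.int64)
    # groupby sorts the region keys by default; named aggregation sets column names.
    return cleaned.groupby("region", as_index=False).agg(
        total_cents=("amount_cents", "sum"),
        orders=("order_id", "count"),
    )


def load(df: pd.DataFrame) -> str:
    """Load: serialize to CSV text; a real pipeline would write to a sink instead."""
    return df.to_csv(index=False)


if __name__ == "__main__":
    print(load(transform(extract(RAW_CSV))))
```

Keeping each stage a plain function makes it easy to test in isolation and, later, to wrap each stage as a task in an orchestrator such as Airflow.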

Benefits of Python for Scalable Data Pipelines

Python offers several advantages for building scalable data pipelines:

- Readability and Maintainability: Python is known for its clear syntax, which makes pipelines easier for data engineers of all experience levels to understand and maintain.

- Extensive Libraries: The rich Python ecosystem provides a wide range of libraries specifically designed for data engineering tasks, simplifying development and reducing boilerplate code.

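- Scalability and Performance: Libraries like NumPy and pandas run their heavy computation in optimized compiled code, so Python handles sizable datasets well on a single machine, and frameworks like Apache Spark extend this to distributed computing when data outgrows one machine.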
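One common way to keep memory use bounded as data grows is to stream input in fixed-size chunks, aggregate each chunk on its own, and merge the partial results. The sketch below uses only the standard library and runs on one machine; the map-then-merge pattern loosely mirrors how Spark combines partial aggregates across partitions, but this is an illustration, not Spark's implementation. The function names, column names, and chunk size are illustrative assumptions.

```python
import csv
import io
from collections import Counter
from itertools import islice
from typing import Dict, Iterable, Iterator, List, TextIO, TypeVar

T = TypeVar("T")


def chunked(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield lists of at most `size` items without loading the whole input."""
    iterator = iter(items)
    while chunk := list(islice(iterator, size)):
        yield chunk


def partial_totals(rows: List[Dict[str, str]], key: str, value: str) -> Counter:
    """Map step: total one chunk on its own, skipping rows with a blank value."""
    totals: Counter = Counter()
    for row in rows:
        if row[value]:
            totals[row[key]] += float(row[value])
    return totals


def streaming_totals(
    source: TextIO, key: str, value: str, chunk_size: int = 10_000
) -> Dict[str, float]:
    """Merge step: combine per-chunk partial totals into one result."""
    combined: Counter = Counter()
    # csv.DictReader reads lazily, so only one chunk of rows (plus the running
    # totals) is held in memory at a time.
    for chunk in chunked(csv.DictReader(source), chunk_size):
        combined.update(partial_totals(chunk, key, value))
    return dict(combined)


if __name__ == "__main__":
    # Hypothetical sample data; in practice `source` would be an open file.
    sample = io.StringIO("region,amount\nnorth,10\nsouth,5\nnorth,7\neast,\neast,3\n")
    print(streaming_totals(sample, "region", "amount", chunk_size=2))
```

A larger chunk size trades memory for fewer per-chunk operations; once one machine is no longer enough, this same kind of aggregation is what a framework like Spark distributes across a cluster.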

Conclusion

Python's ease of use, vast libraries, and scalable frameworks make it an excellent choice for building data pipelines that can handle the ever-increasing demands of big data. As your data needs evolve, Python's flexibility allows you to adapt and scale your pipelines efficiently.

3 Comments

Priya Malik

June 3, 2024

Excellent explanation! Python's clear syntax and rich ecosystem of libraries make it a strong choice for building data pipelines.

Sahil Sharma

June 3, 2024

The scalability of Python for data pipelines is truly impressive, especially with frameworks like Apache Spark for handling massive datasets.

Aisha Kapoor

June 3, 2024

This blog effectively highlights the key benefits of using Python for data engineering. As a data engineer myself, I find Python's user-friendliness and extensive libraries very valuable.